This basic explanation of the Attention Mechanism in LLMs was part of a teaching session with Google’s Gemini 2.0 Pro.

Let’s break down the attention mechanism in Large Language Models (LLMs) step-by-step.

Step 1: The Problem with Traditional Sequence Models

Before attention, models like Recurrent Neural Networks (RNNs) and LSTMs processed sequences (like sentences) one word at a time. They maintained a “hidden state” that was supposed to carry information from the entire input sequence seen so far.

Imagine you have a long sentence like: “The incredibly fast brown fox jumped over the lazy dog, which was sleeping peacefully under a tree.”

An RNN would process “The,” then update its hidden state. Then it processes “incredibly,” updating the hidden state again, and so on. By the time it gets to “tree,” the information about “The” and “incredibly” might be significantly diluted or even lost, especially in very long sequences. This is called the long-range dependency problem. The further apart two relevant words are, the harder it is for the RNN to connect them.

Basically with traditional sequence models we are squeezing all of the context into a single hidden state vector.

Step 2: The Core Idea of Attention – Relevance Scores

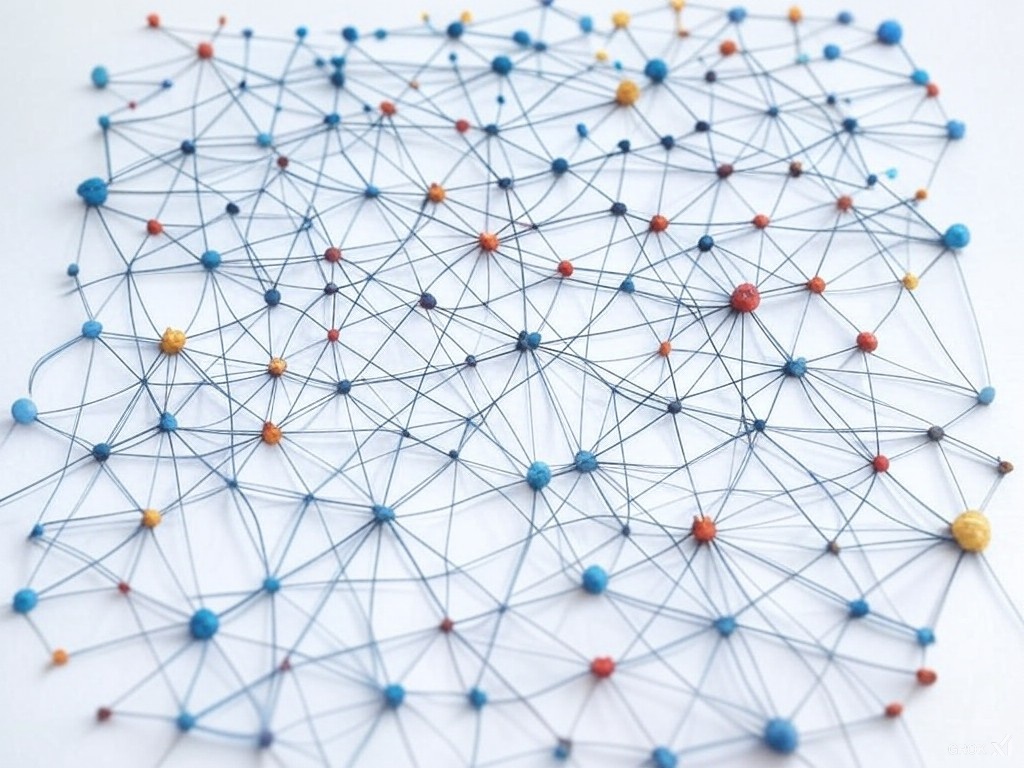

Attention introduces a way to bypass this “information bottleneck.” Instead of forcing all information through a single hidden state, attention allows the model to directly look back at all the previous words in the input and decide which ones are most relevant at each step of the output generation.

Think of it like reading a sentence and highlighting the important words that help you understand a particular part. You don’t just remember the last few words; you actively focus on the relevant words, no matter where they are in the sentence.

Attention calculates “relevance scores” (also often called “attention weights”) between each word in the input and the current word being processed or predicted. A higher score means that input word is more important in the current context. These aren’t pre-determined; they’re learned during training.

Let’s move on.

Step 3: Queries, Keys, and Values (Q, K, V)

To calculate these relevance scores, attention uses three learned components, usually derived from the input sequence itself:

- Queries (Q): Think of the query as representing “What am I looking for?”. It’s a representation of the current word or position in the sequence that we’re trying to understand or predict. It is the piece of information asking the question.

- Keys (K): Think of the keys as representing “What information do I have?”. Each word in the input sequence gets a “key” vector. Keys represent the information available, acting as an index.

- Values (V): Think of the values as representing “What is the actual content?”. Each word also gets a “value” vector. This is the actual information, what we will blend together.

These Q, K, and V vectors are typically created by multiplying the input word embeddings (or the hidden states from a previous layer) by learned weight matrices. So, we have three separate weight matrices (Wq, Wk, Wv) that are learned during training. Each weight matrix transforms an items input embedding, x, into either its Q, K, or V.

Query = Input Embedding * WqKey = Input Embedding * WkValue = Input Embedding * Wv

This is a crucial point, so let’s elaborate with an example. Let’s simplify things and say our sentence is just: “The fox jumped.” And, let’s say we’re currently processing the word “jumped.”

- Word Embeddings: First, each word is converted into a numerical vector called a word embedding. These embeddings capture semantic meaning. Let’s imagine (very unrealistically for simplicity) our embeddings are:

- “The”: [0.1, 0.2]

- “fox”: [0.9, 0.3]

- “jumped”: [0.5, 0.8]

- Learned Weight Matrices (Wq, Wk, Wv): During training, the model learns three weight matrices:

Wq,Wk, andWv. These matrices are not specific to individual words; they’re applied to all words. They transform the word embeddings into Query, Key, and Value vectors. Let’s imagine (again, very simplified) these matrices are:Wq = [[0.5, 0.1], [0.2, 0.6]]Wk = [[0.3, 0.4], [0.7, 0.1]]Wv = [[0.8, 0.2], [0.3, 0.9]] - Calculating Q, K, V: Now, we use these matrices to calculate the Query, Key, and Value vectors for each word. Since we’re focusing on “jumped,” that word’s embedding will be used to create the Query. All words’ embeddings will be used to create their respective Keys and Values.

- Query (for “jumped”):

[0.5, 0.8] * [[0.5, 0.1], [0.2, 0.6]] = [0.41, 0.53] - Keys (for all words):

"The": [0.1, 0.2] * [[0.3, 0.4], [0.7, 0.1]] = [0.17, 0.06]"fox": [0.9, 0.3] * [[0.3, 0.4], [0.7, 0.1]] = [0.48, 0.39]"jumped": [0.5, 0.8] * [[0.3, 0.4], [0.7, 0.1]] = [0.71, 0.28] - Values (for all words):

"The": [0.1, 0.2] * [[0.8, 0.2], [0.3, 0.9]] = [0.14, 0.20]"fox": [0.9, 0.3] * [[0.8, 0.2], [0.3, 0.9]] = [0.81, 0.45]"jumped": [0.5, 0.8] * [[0.8, 0.2], [0.3, 0.9]] = [0.64, 0.82]

- Query (for “jumped”):

So, in summary:

- We start with word embeddings.

- We have learned weight matrices (Wq, Wk, Wv) that are shared across all words.

- We multiply each word’s embedding by each of the weight matrices to get its Query, Key, and Value.

- The Query is derived from the word we’re currently focusing on. The Keys and Values are derived from all the words in the input.

The dimensions of the resulting Q, K, and V vectors are determined by the dimensions of the weight matrices, which are hyperparameters chosen during model design. Importantly, the dimensions of Q and K must be the same, because we’re going to compare them in the next step. The dimension of V can be different.

Okay, let’s move on to the next crucial step:

Step 4: Calculating Attention Scores (Dot Product and Softmax)

Now that we have our Queries (Q), Keys (K), and Values (V), we calculate the attention scores. This is where the “attention” really happens.

- Dot Product: We take the dot product of the Query (Q) vector (representing the current word) with each of the Key (K) vectors (representing all the words in the input). The dot product measures the similarity between two vectors. A larger dot product means the Query and Key are more aligned, suggesting higher relevance. Using our previous example, where Q (for “jumped”) is

[0.41, 0.53], and we have Keys for “The,” “fox,” and “jumped”:- Attention Score (“jumped” attending to “The”):

[0.41, 0.53] . [0.17, 0.06]=0.07 + 0.03=0.10 - Attention Score (“jumped” attending to “fox”):

[0.41, 0.53] . [0.48, 0.39]=0.20 + 0.21=0.41 - Attention Score (“jumped” attending to “jumped”):

[0.41, 0.53] . [0.71, 0.28]=0.29 + 0.15=0.44

- Attention Score (“jumped” attending to “The”):

- Scaling: Before applying softmax, the dot product scores are usually scaled down. This is typically done by dividing by the square root of the dimension of the Key vectors (√dk). This scaling is crucial for stable training, especially when the Key vectors have high dimensions. It prevents the dot products from becoming too large, which can lead to extremely small gradients during backpropagation. Let’s say the dimension of our Key vectors (dk) is 2 (as in our example). Then √dk is approximately 1.41. We’d divide each score by 1.41:

- “The”: 0.10 / 1.41 ≈ 0.07

- “fox”: 0.41 / 1.41 ≈ 0.29

- “jumped”: 0.44 / 1.41 ≈ 0.31

- Softmax: We apply the softmax function to these scaled dot products. Softmax converts the scores into a probability distribution. This means the scores will be between 0 and 1, and they will all add up to 1. This gives us the attention weights. Applying softmax to our scaled scores (approximately):

- “The”: softmax(0.07) ≈ 0.24

- “fox”: softmax(0.29) ≈ 0.35

- “jumped”: softmax(0.31) ≈ 0.36

Note that these will add up to ≈ 1

These final softmax values (0.24, 0.35, 0.36) are the attention weights. They tell us how much the word “jumped” should “attend” to each of the input words (“The,” “fox,” “jumped”). In this (simplified) example, “jumped” attends most to itself (0.36) and the “fox” (0.35), and less to “The” (0.24).

Okay, let’s continue.

Step 5: Weighted Sum and Output

We’ve calculated our attention weights (the probability distribution). Now, we use these weights to create a weighted sum of the Value vectors. This weighted sum represents the context that the model has learned is most relevant to the current word.

- Weighted Sum: Multiply each Value vector by its corresponding attention weight (from the softmax output). Then, sum up these weighted Value vectors. Recall our Value vectors from the previous example:

“The”:[0.14, 0.20]

“fox”:[0.81, 0.45]

“jumped”:[0.64, 0.82]

And our attention weights (softmax output) for “jumped”:”

“The”: 0.24″

“fox”: 0.35

“jumped”: 0.36

Now, we calculate the weighted sum:(0.24 * [0.14, 0.20]) + (0.35 * [0.81, 0.45]) + (0.36 * [0.64, 0.82]) = [0.03, 0.05] + [0.28, 0.16] + [0.23, 0.30] = [0.54, 0.51] - Output: This resulting vector

[0.54, 0.51]is the output of the attention mechanism for the word “jumped”. It’s a context-aware representation of “jumped,” taking into account the relevant information from the other words in the input, as determined by the attention weights. This output vector can then be passed on to subsequent layers of the LLM (e.g., a feed-forward network) for further processing.

In essence, the attention mechanism has created a weighted average of the Value vectors, where the weights are determined by the relevance of each word to the current word being processed. This allows the model to focus on the most important parts of the input sequence when generating the output.

Here’s a summary of the entire attention mechanism process:

Summary Attention Mechanism

1. The Problem (Long-Range Dependencies):

Traditional sequence models (RNNs, LSTMs) struggle to connect words that are far apart in a sequence (the long-range dependency problem). Information from earlier words can be lost or diluted as the sequence is processed.

2. The Core Idea (Relevance Scores):

Attention allows the model to directly look back at all previous words and determine their relevance to the current word being processed, regardless of their distance. This is done by calculating “attention weights” (relevance scores).

3. Queries, Keys, and Values (Q, K, V):

- Input Embeddings: Each word in the input sequence is first converted into a numerical vector called a word embedding.

- Learned Weight Matrices (Wq, Wk, Wv): The model learns three weight matrices:

Wq,Wk, andWv. These are shared across all words in the sequence. - Calculating Q, K, V:

Query (Q) = Input Embedding * Wq(What am I looking for?)Key (K) = Input Embedding * Wk(What information do I have?)Value (V) = Input Embedding * Wv(What is the actual content?)- The Query is calculated for the current word being processed.

- Keys and Values are calculated for all words in the input sequence.

4. Calculating Attention Scores (Dot Product, Scaling, Softmax):

- Dot Product: Calculate the dot product of the Query (Q) with each Key (K):

score = Q . K. This measures the similarity between the Query and each Key. - Scaling: Divide each dot product score by the square root of the dimension of the Key vectors (√dk):

scaled_score = score / √dk. This prevents the scores from becoming too large. - Softmax: Apply the softmax function to the scaled scores to obtain a probability distribution (attention weights):

attention_weights = softmax(scaled_score). These weights are between 0 and 1, and they sum up to 1.

5. Weighted Sum and Output:

- Weighted Sum: Multiply each Value (V) vector by its corresponding attention weight and sum the results:

context_vector = Σ (attention_weight * V). - Output: The

context_vectoris the output of the attention mechanism. It’s a weighted average of the Value vectors, representing the context relevant to the current word. This vector is then passed to subsequent layers of the LLM.

Example (Simplified):

Sentence: “The fox jumped.” Processing “jumped.”

- Embeddings (example):

- “The”: [0.1, 0.2]

- “fox”: [0.9, 0.3]

- “jumped”: [0.5, 0.8]

- Weight Matrices (example):

Wq = [[0.5, 0.1], [0.2, 0.6]] Wk = [[0.3, 0.4], [0.7, 0.1]] Wv = [[0.8, 0.2], [0.3, 0.9]] - Q, K, V (example):

- Q (“jumped”): [0.41, 0.53]

- K (“The”): [0.17, 0.06], K (“fox”): [0.48, 0.39], K (“jumped”): [0.71, 0.28]

- V (“The”): [0.14, 0.20], V (“fox”): [0.81, 0.45], V (“jumped”): [0.64, 0.82]

- Attention Scores (example):

- Dot Products: 0.10, 0.41, 0.44

- Scaled (√dk = 1.41): 0.07, 0.29, 0.31

- Softmax: 0.24, 0.35, 0.36 (attention weights)

- Weighted Sum and Output (example):

- (0.24 * [0.14, 0.20]) + (0.35 * [0.81, 0.45]) + (0.36 * [0.64, 0.82]) = [0.54, 0.51]

This final vector [0.54, 0.51] represents the context-aware representation of “jumped,” incorporating information from “The,” “fox,” and itself, weighted by their relevance.

This complete breakdown should give you a comprehensive understanding of the attention mechanism. Remember that the weight matrices (Wq, Wk, Wv) are learned during the training process, and the example values are greatly simplified for illustrative purposes. Real-world LLMs use much higher-dimensional vectors and matrices.